The Intricacies of AI's Struggles with Counting Letters: "count the number of 'r's in the word strawberry"

The difficulty AI models have in counting the three "r"s in "strawberry" may seem trivial, but it reveals a good deal about the limitations of current language models. Far from being a mere technical curiosity, it exposes the gap between how these models process language and what tasks demanding character-level precision actually require.

Tokenization: The Foundation of the Problem

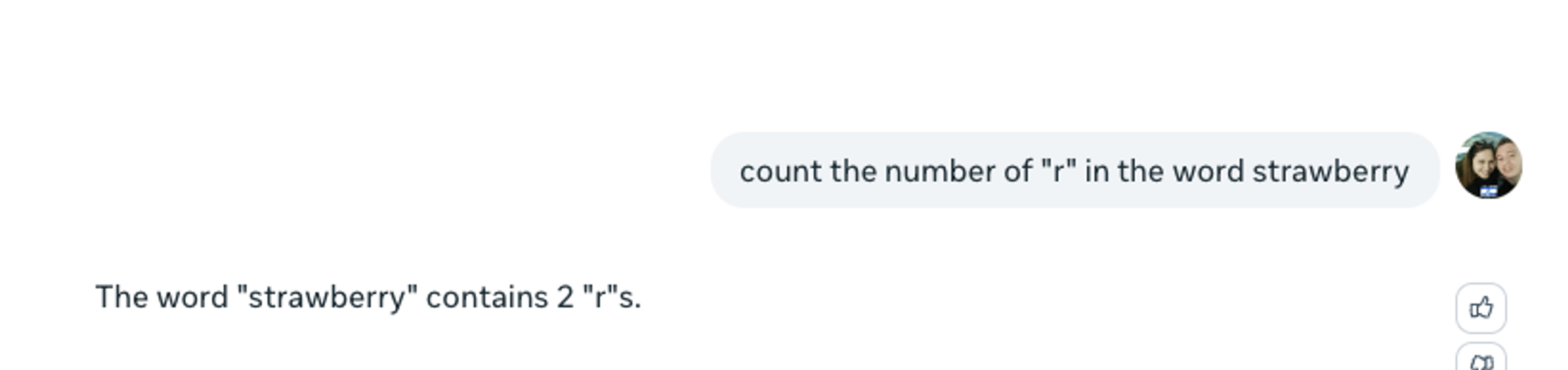

Large Language Models (LLMs) such as GPT-4 do not read text character by character. Their input first passes through a step called tokenization, which divides the text into smaller units called tokens. Depending on the tokenizer's design and training data, a token can be a whole word, a fragment of a word (a subword), or a single character. For instance, the word "strawberry" might be split into tokens such as "straw" and "berry."

This segmentation is useful for understanding and generating text, because working with familiar multi-character units lets the model process language more efficiently. It also has a significant drawback. Each token reaches the model as a single numeric ID rather than as a sequence of letters, so the model has no direct view of which characters a token contains, and it can miscount letters such as "r" that are spread across several tokens. Tokenization, while crucial for complex language tasks, becomes a hindrance for simple character-level operations (OpenAI Developer Forum, Bionic Teaching).
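To make this concrete, here is a toy sketch of greedy longest-match subword tokenization. The vocabulary and integer IDs are invented for illustration; real tokenizers use much larger learned vocabularies and may split "strawberry" differently.

```python
# Hypothetical toy vocabulary: the strings and IDs are invented for illustration,
# not taken from any real model's tokenizer.
TOY_VOCAB = {
    "straw": 1001,
    "berry": 1002,
    "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8,
}


def tokenize(word: str, vocab: dict[str, int]) -> list[str]:
    """Greedy longest-match segmentation of `word` into vocabulary pieces."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a vocab entry matches.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry covers {word[i]!r}")
    return pieces


word = "strawberry"
pieces = tokenize(word, TOY_VOCAB)
ids = [TOY_VOCAB[p] for p in pieces]

print(pieces)           # ['straw', 'berry']
print(ids)              # [1001, 1002]: this is all the model receives
print(word.count("r"))  # 3: answering this requires looking inside the pieces
```

The model's input here is just `[1001, 1002]`. Nothing in those two integers says that the first piece contains one "r" and the second contains two, so the model has to rely on whatever it has learned about each token's spelling during training.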

Semantic Prioritization and Its Consequences

Another source of difficulty lies in the models' design. LLMs prioritize semantic understanding, focusing on the meaning and context of words rather than their exact composition. This priority helps them excel at text generation and comprehension, but it can lead to errors on detail-oriented tasks such as counting letters.

For example, when asked to count the "r"s in "strawberry," the model may treat the request as a question about the word rather than as a strict counting exercise. It could handle "straw" and "berry" as separate units and lose track of the fact that the total is one "r" in "straw" plus two in "berry." This behavior reflects the model's emphasis on higher-order language functions, sometimes at the expense of more basic, yet critical, tasks.

The Critical Role of Prompt Design

How instructions are phrased can significantly affect a model's performance. Prompt engineering, the practice of crafting prompts to elicit specific behaviors, can sometimes mitigate these issues. For example, explicitly instructing the model to "spell out the word 'strawberry' letter by letter and count each 'r'" might yield a more accurate result than a simple query like "how many 'r's are in 'strawberry'?"

This approach can help because it pushes the model through a step-by-step process, making it more likely to attend to individual characters rather than treating the word as a whole. Even with carefully designed prompts, however, the model may still struggle, because its architecture is not optimized for such granular tasks (Bionic Teaching, OpenAI Developer Forum).
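The letter-by-letter procedure that the explicit prompt asks for can be written out as ordinary code. The sketch below builds the two prompts quoted earlier and produces the kind of step-by-step trace the explicit prompt is meant to elicit. The function names are hypothetical, and the trace is a deterministic reference, not actual model output.

```python
def direct_prompt(word: str, letter: str) -> str:
    # The simple query: asks for the count in one step.
    return f"How many '{letter}'s are in '{word}'?"


def step_by_step_prompt(word: str, letter: str) -> str:
    # The explicit instruction: asks for one letter per step.
    return (f"Spell out the word '{word}' letter by letter "
            f"and count each '{letter}'.")


def reference_trace(word: str, letter: str) -> tuple[list[str], int]:
    """Spell `word` one character at a time, keeping a running tally of `letter`."""
    lines = []
    tally = 0
    for position, ch in enumerate(word, start=1):
        if ch == letter:
            tally += 1
            lines.append(f"{position}. {ch} <- '{letter}' #{tally}")
        else:
            lines.append(f"{position}. {ch}")
    return lines, tally


print(direct_prompt("strawberry", "r"))
print(step_by_step_prompt("strawberry", "r"))
steps, total = reference_trace("strawberry", "r")
print("\n".join(steps))
print("Total:", total)
```

Each step in the trace involves a single character. Once a word is written out with separators like this, each letter is likely to become its own token, which is one plausible reason spelling first tends to help.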

Broader Implications for AI Development

The issue of counting "r"s in "strawberry" is not a trivial glitch; it highlights a broader challenge in AI development. Current models, while powerful at understanding and generating human-like language, remain limited in tasks that require precision at the character level. This limitation is a reminder that AI systems still need refinement before they can handle both complex and simple tasks with equal proficiency.

Moreover, this problem illustrates a fundamental difference between human and machine processing. Humans can effortlessly switch between understanding the meaning of a sentence and counting the letters within a word. AI models, for all their sophistication, still struggle to bridge this gap, which points to areas where further research and development are needed.

Future Directions: Balancing Precision and Comprehension

As AI continues to evolve, addressing these limitations will be crucial for building more robust and reliable systems. Improvements in how models handle tokenization, combined with more sophisticated prompt engineering, could strengthen their ability to perform character-level tasks. Developing models that integrate semantic understanding with character-level precision will also be key to overcoming the current difficulties with seemingly simple tasks like counting letters.
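The trade-off behind changing tokenization can be illustrated with a small comparison. The sketch below views one sentence two ways: at the character level, where every character is a token, and through a hand-picked subword split that stands in for a real tokenizer (the split is an assumption, not any model's actual output). At the character level every "r" is directly visible, but the sequence is more than three times longer, and because standard self-attention cost grows quadratically with sequence length, longer inputs are considerably more expensive to process.

```python
def char_tokens(text: str) -> list[str]:
    # Character-level tokenization: every character is its own token.
    return list(text)


# Hypothetical subword split, chosen by hand for illustration only.
SUBWORD_SPLIT = ["How", " many", " r", "'s", " are", " in", " straw", "berry", "?"]
text = "".join(SUBWORD_SPLIT)

chars = char_tokens(text)
print(len(SUBWORD_SPLIT), len(chars))  # 9 31
print(chars.count("r"))                # 5: each 'r' is a separate, visible token

# Counting over subword pieces gives the same total, but only because this code
# can read each piece's spelling; a model sees only the pieces' IDs.
print(sum(piece.count("r") for piece in SUBWORD_SPLIT))  # 5
```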

By focusing on these areas, researchers and developers can create AI systems that are not only strong at language comprehension but also precise and reliable in every aspect of text processing. This would lead to more versatile AI applications capable of handling the full range of tasks that real-world scenarios demand.

Lexi Shield: A tech-savvy strategist with a sharp mind for problem-solving, Lexi specializes in data analysis and digital security. Her expertise in navigating complex systems makes her the perfect protector and planner in high-stakes scenarios.

Chen Osipov: A versatile and hands-on field expert, Chen excels in tactical operations and technical gadgetry. With his adaptable skills and practical approach, he is the go-to specialist for on-ground solutions and swift action.
